06 Deploy the Logging System

Published 2021-05-29 22:36

Deploying the ELK Stack on Kubernetes

Contributor: MappleZF

Version: 1.0.0

1. Deploy Elasticsearch

1.1 Download

Official site: https://www.elastic.co/cn/

Download page: https://www.elastic.co/cn/downloads/elasticsearch

[root@k8smaster01:/opt/src]# wget -c https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-7.9.0-linux-x86_64.tar.gz

[root@k8smaster01:/opt/src]# wget -c https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-6.8.12.tar.gz

1.2 Install JDK 11

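Note: this guide runs Elasticsearch 7.9 on JDK 11. The linux-x86_64 tarball also bundles its own JDK, which Elasticsearch uses when JAVA_HOME is not set.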

Download the JDK from the official site.

[root@k8smaster02.host.com:/opt/src]# tar -xf jdk-11.0.8_linux-x64_bin.tar -C /usr/local/

[root@k8smaster02.host.com:/opt/src]# cd /usr/local/ && cd jdk-11.0.8/ && ls

bin conf include jmods legal lib README.html release

1.3 Install Elasticsearch

[root@k8smaster02.host.com:/opt/src]# tar -xf elasticsearch-7.9.0-linux-x86_64.tar.gz -C /data/

[root@k8smaster02.host.com:/opt/src]# cd /data/ && mv elasticsearch-7.9.0 elasticsearch

[root@k8smaster02.host.com:/data]# mkdir -p /data/elasticsearch/{data,logs}

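Day-to-day development here uses JDK 1.8, so the system-wide JAVA_HOME is not switched to JDK 11. Instead, only the Elasticsearch startup script is changed to point at the downloaded JDK 11.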

1.4 Configure Elasticsearch

1.4.1 Modify elasticsearch/bin/elasticsearch

[root@k8smaster02.host.com:/data/elasticsearch/bin]# vim elasticsearch

Add the following:

#!/bin/bash
# CONTROLLING STARTUP:
#
# This script relies on a few environment variables to determine startup
# behavior, those variables are:
#
# ES_PATH_CONF -- Path to config directory
# ES_JAVA_OPTS -- External Java Opts on top of the defaults set
#
# Optionally, exact memory values can be set using the `ES_JAVA_OPTS`. Example
# values are "512m", and "10g".
#
# ES_JAVA_OPTS="-Xms8g -Xmx8g" ./bin/elasticsearch

# Use our own JDK 11 for Elasticsearch only
export JAVA_HOME=/usr/local/jdk-11.0.8
export PATH=$JAVA_HOME/bin:$PATH

# JDK check: use JDK 11 if it is present, otherwise fall back to java on PATH
if [ -x "$JAVA_HOME/bin/java" ]; then
    JAVA="$JAVA_HOME/bin/java"
else
    JAVA=$(which java)
fi

source "`dirname "$0"`"/elasticsearch-env
......(rest of the script unchanged)......
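The JDK check added above can be written as a small function, which makes the fallback order explicit and testable. This is a sketch; `pick_java` is a hypothetical name and not part of Elasticsearch. As far as we can tell from the 7.x scripts, `bin/elasticsearch-env` (sourced right after the check) derives the Java binary from `JAVA_HOME` on its own whenever it is set. So the exported `JAVA_HOME` is what actually selects JDK 11.

```bash
#!/bin/bash
# Hypothetical helper mirroring the JDK check added to bin/elasticsearch:
# prefer <jdk_home>/bin/java when it is executable, otherwise fall back to
# whatever `java` is on PATH (prints nothing and returns 1 if none exists).
pick_java() {
    local jdk_home="$1"
    if [ -x "${jdk_home}/bin/java" ]; then
        echo "${jdk_home}/bin/java"
    else
        command -v java
    fi
}
```

For example, `pick_java /usr/local/jdk-11.0.8` prints `/usr/local/jdk-11.0.8/bin/java` once the JDK from 1.2 is unpacked there.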

1.4.2 Modify elasticsearch/config/elasticsearch.yml

[root@k8smaster02.host.com:/data/elasticsearch/config]# vim elasticsearch.yml

Change the following:

cluster.name: es.lowan.com

node.name: k8smaster02.host.com

path.data: /data/elasticsearch/data

path.logs: /data/elasticsearch/logs

bootstrap.memory_lock: true

network.host: 192.168.13.102

http.port: 9200

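Note: because network.host binds a non-loopback address, Elasticsearch enforces its bootstrap checks, so the Discovery section must also be configured. For a single node, list the node itself in cluster.initial_master_nodes. Otherwise startup fails with:

[1]: the default discovery settings are unsuitable for production use; at least one of [discovery.seed_hosts, discovery.seed_providers, cluster.initial_master_nodes] must be configured

ERROR: Elasticsearch did not exit normally - check the logs at /data/elasticsearch/logs/es.lowan.com.log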

# --------------------------------- Discovery ----------------------------------

#

# Pass an initial list of hosts to perform discovery when this node is started:

# The default list of hosts is ["127.0.0.1", "[::1]"]

#

#discovery.seed_hosts: ["host1", "host2"]

#

# Bootstrap the cluster using an initial set of master-eligible nodes:

#

cluster.initial_master_nodes: ["k8smaster02.host.com"]

#cluster.initial_master_nodes: ["node-1", "node-2"]

#
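Which discovery lines are needed depends only on how many master-eligible nodes there are. Below is a sketch of a hypothetical helper, `es_discovery_block`, that prints them. It assumes node names double as resolvable hostnames, as they do here, where node.name is the host FQDN. With one node it prints only `cluster.initial_master_nodes`, as configured above; with several it also prints `discovery.seed_hosts`. For a one-node setup, Elasticsearch 7.x also accepts `discovery.type: single-node` instead.

```bash
#!/bin/bash
# Hypothetical helper: print the discovery settings for elasticsearch.yml.
# Arguments are master-eligible node names, assumed to double as hostnames.
es_discovery_block() {
    [ "$#" -gt 0 ] || return 1
    local joined
    # Build a YAML flow list such as ["a", "b"]
    joined=$(printf '"%s", ' "$@")
    joined="[${joined%, }]"
    if [ "$#" -gt 1 ]; then
        echo "discovery.seed_hosts: ${joined}"
    fi
    echo "cluster.initial_master_nodes: ${joined}"
}
```

The output can be appended to elasticsearch.yml, e.g. `es_discovery_block k8smaster02.host.com >> /data/elasticsearch/config/elasticsearch.yml`. Remove any existing discovery lines first so that keys are not duplicated.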

1.4.3 Tune jvm.options

[root@k8smaster02.host.com:/data/elasticsearch/config]# vim jvm.options

Change the following:

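# If memory allows, the heap can go up to just under 32 GB (and no more than half of physical RAM)
-Xms12g
-Xmx12g
## GC configuration
## The original CMS lines are commented out and G1 is enabled instead: CMS is
## deprecated since JDK 9, and G1 is the default collector on JDK 11.
#8-13:-XX:+UseConcMarkSweepGC
#8-13:-XX:CMSInitiatingOccupancyFraction=75
#8-13:-XX:+UseCMSInitiatingOccupancyOnly
-XX:+UseG1GC
## The next two flags only affect CMS; under G1 they have no effect and can be dropped
-XX:CMSInitiatingOccupancyFraction=75
-XX:+UseCMSInitiatingOccupancyOnly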
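The heap comment above boils down to a rule of thumb: use half of physical RAM, stay below the compressed-oops threshold of roughly 32 GB, and keep -Xms equal to -Xmx. This is a sketch; the 31 GB cap and the function names are assumptions for illustration.

```bash
#!/bin/bash
# Hypothetical helper: suggest an Elasticsearch heap size (GB) from total RAM (GB):
# half of RAM, capped at 31 GB to stay under the compressed-oops threshold.
es_heap_gb() {
    local ram_gb="$1" cap_gb=31
    local half=$(( ram_gb / 2 ))
    if [ "$half" -gt "$cap_gb" ]; then
        echo "$cap_gb"
    else
        echo "$half"
    fi
}

# Print the matching jvm.options lines (-Xms and -Xmx kept equal).
es_heap_opts() {
    local heap
    heap=$(es_heap_gb "$1")
    printf -- '-Xms%sg\n-Xmx%sg\n' "$heap" "$heap"
}
```

For instance, on a host with 24 GB of RAM, `es_heap_opts 24` prints the `-Xms12g`/`-Xmx12g` pair used in this guide. Total RAM in GB can be read with `free -g | awk '/^Mem:/ {print $2}'`.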

1.4.4 Create a regular user

Note: Elasticsearch refuses to start as root.

[root@k8smaster02.host.com:/data/elasticsearch]# useradd -s /bin/bash -M elasticsearch

[root@k8smaster02.host.com:/data/elasticsearch]# chown -R elasticsearch:elasticsearch /data/elasticsearch

[root@k8smaster02.host.com:/data/elasticsearch]# id elasticsearch

uid=1001(elasticsearch) gid=1001(elasticsearch) groups=1001(elasticsearch)

1.4.5 Raise the file descriptor and memory-lock limits

[root@k8smaster02.host.com:/etc/security]# vim /etc/security/limits.d/elasticsearch.conf

elasticsearch soft nofile 1048576
elasticsearch hard nofile 1048576
elasticsearch soft fsize unlimited
elasticsearch hard memlock unlimited
elasticsearch soft memlock unlimited
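To double-check what the limits file grants the elasticsearch user, a small lookup helps. Below is a sketch with a hypothetical `limit_value` function. It treats a `-` type as applying to both soft and hard, as limits.conf does. It only reads the file; the limits actually in effect for the running node are in `/proc/<pid>/limits`.

```bash
#!/bin/bash
# Hypothetical helper: print the value configured for <domain> <type> <item>
# in a limits.conf-style file. Comment lines are skipped, and a "-" type
# counts for both soft and hard.
limit_value() {
    local file="$1" domain="$2" type="$3" item="$4"
    awk -v d="$domain" -v t="$type" -v i="$item" \
        '$1 !~ /^#/ && $1 == d && ($2 == t || $2 == "-") && $3 == i { print $4 }' "$file"
}
```

Usage: `limit_value /etc/security/limits.d/elasticsearch.conf elasticsearch soft nofile`.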

1.4.6 Tune the kernel

[root@k8smaster02.host.com:/etc/security]# sysctl -w vm.max_map_count=262144

vm.max_map_count = 262144

[root@k8smaster02.host.com:/etc/security]# echo "vm.max_map_count=262144" >> /etc/sysctl.conf

[root@k8smaster02.host.com:/etc/security]# sysctl -p

vm.max_map_count = 262144

Or use a script, which appends the setting only if it is not already present:

if ! grep -q '^vm.max_map_count' /etc/sysctl.conf; then
    echo 'vm.max_map_count=262144' >> /etc/sysctl.conf
fi
sysctl -p
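The append-if-missing script leaves an existing but different vm.max_map_count value untouched. A slightly more general sketch, the hypothetical `ensure_sysctl`, rewrites the key in place when an uncommented entry exists and appends it otherwise. It only edits the file; the value still has to be applied with `sysctl -p`.

```bash
#!/bin/bash
# Hypothetical helper: set <key>=<value> in a sysctl.conf-style file.
# An existing uncommented "key = ..." line is rewritten; otherwise the
# setting is appended. Exact string comparison avoids regex escaping of dots.
ensure_sysctl() {
    local file="$1" key="$2" value="$3" tmp
    tmp=$(mktemp)
    awk -v k="$key" -v v="$value" '
        BEGIN { found = 0 }
        {
            line = $0
            sub(/^[ \t]+/, "", line)
            split(line, parts, "=")
            name = parts[1]
            sub(/[ \t]+$/, "", name)
            if (substr(line, 1, 1) != "#" && index(line, "=") > 0 && name == k) {
                print k "=" v
                found = 1
                next
            }
            print
        }
        END { if (!found) print k "=" v }
    ' "$file" > "$tmp" && cat "$tmp" > "$file"
    rm -f "$tmp"
}
```

Usage: `ensure_sysctl /etc/sysctl.conf vm.max_map_count 262144 && sysctl -p`.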

1.5 Start Elasticsearch

[root@k8smaster02.host.com:/data/elasticsearch/bin]# su -c "/data/elasticsearch/bin/elasticsearch -d" elasticsearch

[root@k8smaster02.host.com:/data/elasticsearch/bin]# ps -ef | grep elasticsearch

elastic+ 244947 1 71 22:04 ? 00:01:24 /usr/local/jdk-11.0.8/bin/java -Xshare:auto -Des.networkaddress.cache.ttl=60 -Des.networkaddress.cache.negative.ttl=10 -XX:+AlwaysPreTouch -Xss1m -Djava.awt.headless=true -Dfile.encoding=UTF-8 -Djna.nosys=true -XX:-OmitStackTraceInFastThrow -Dio.netty.noUnsafe=true -Dio.netty.noKeySetOptimization=true -Dio.netty.recycler.maxCapacityPerThread=0 -Dio.netty.allocator.numDirectArenas=0 -Dlog4j.shutdownHookEnabled=false -Dlog4j2.disable.jmx=true -Djava.locale.providers=SPI,COMPAT -Xms12g -Xmx12g -XX:+UseG1GC -XX:CMSInitiatingOccupancyFraction=75 -XX:+UseCMSInitiatingOccupancyOnly -Djava.io.tmpdir=/tmp/elasticsearch-3665753017343694820 -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=data -XX:ErrorFile=logs/hs_err_pid%p.log -Xlog:gc*,gc+age=trace,safepoint:file=logs/gc.log:utctime,pid,tags:filecount=32,filesize=64m -XX:MaxDirectMemorySize=6442450944 -Des.path.home=/data/elasticsearch -Des.path.conf=/data/elasticsearch/config -Des.distribution.flavor=default -Des.distribution.type=tar -Des.bundled_jdk=true -cp /data/elasticsearch/lib/* org.elasticsearch.bootstrap.Elasticsearch -d

elastic+ 245137 244947 0 22:04 ? 00:00:00 /data/elasticsearch/modules/x-pack-ml/platform/linux-x86_64/bin/controller

root 247347 137060 0 22:06 pts/2 00:00:00 grep --color=auto elasticsearch

[root@k8smaster02.host.com:/data/elasticsearch/bin]# netstat -luntp | grep 9200

tcp6 0 0 192.168.13.102:9200 :::* LISTEN 244947/java
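Besides ps and netstat, the cluster health API shows whether Elasticsearch is actually serving requests. Below is a sketch with a hypothetical `es_health_status` helper that extracts the `status` field from the `_cluster/health` JSON with sed, so jq is not required. green means all shards are allocated. yellow means some replica shards are unassigned, which is expected on a single node for any index that still has replicas.

```bash
#!/bin/bash
# Hypothetical helper: read _cluster/health JSON on stdin and print its
# status (green/yellow/red). Plain sed, no jq required.
es_health_status() {
    sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p'
}
```

Usage: `curl -s http://192.168.13.102:9200/_cluster/health | es_health_status`.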

1.6 Adjust the Elasticsearch index template for logs

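Note: this is a single node holding a single copy of the data, so replicas must be set to 0. Otherwise every k8s* index stays yellow, because its replica shards can never be allocated. On a three-node cluster this step can be skipped.

[root@k8smaster02.host.com:/data/elasticsearch/bin]# curl -H "Content-Type: application/json" -XPUT http://192.168.13.102:9200/_template/k8s -d '{
  "index_patterns": ["k8s*"],
  "settings": {
    "number_of_shards": 5,
    "number_of_replicas": 0
  }
}'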
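Only `number_of_replicas` differs between the single-node and three-node cases. Below is a sketch of a hypothetical `k8s_template_body` helper that prints the same request body, with the replica count as a parameter. The 5 primary shards are kept from the command above.

```bash
#!/bin/bash
# Hypothetical helper: print the legacy index-template body used in 1.6.
# $1 = number of replicas (0 for a single node, 1 for a three-node cluster).
k8s_template_body() {
    local replicas="${1:-0}"
    cat <<EOF
{
  "index_patterns": ["k8s*"],
  "settings": {
    "number_of_shards": 5,
    "number_of_replicas": ${replicas}
  }
}
EOF
}
```

To send it: `k8s_template_body 0 | curl -H "Content-Type: application/json" -XPUT http://192.168.13.102:9200/_template/k8s -d @-`. curl's `-d @-` reads the body from stdin.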

1.7 Script to delete old ELK indices

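#!/bin/bash
# Not yet tested
# Keep 7 days of log indices: delete the index dated exactly 7 days ago
DATE=$(date -d "7 days ago" +%Y.%m.%d)
ES_URL="http://192.168.56.30:9200"
# Index name prefixes
LOG_NAMES=(es-nacos-server es-eureka-server)
# For each prefix, delete <prefix>-<DATE> and report whether it succeeded
for LOG_NAME in "${LOG_NAMES[@]}"; do
    INDEX_NAME="${LOG_NAME}-${DATE}"
    HTTP_CODE=$(curl -s -o /dev/null -w '%{http_code}' -XDELETE "${ES_URL}/${INDEX_NAME}")
    if [ "$HTTP_CODE" = "200" ]; then
        echo "${INDEX_NAME} deleted"
    else
        echo "${INDEX_NAME} not deleted (HTTP ${HTTP_CODE})"
    fi
done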
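The script deletes only the index dated exactly seven days ago, so it relies on running once a day. A missed day leaves that day's index behind. Below is a sketch of a hypothetical `index_names_between` helper that lists the dated names for a range of past days, so a single run can also catch up. It assumes GNU date (`-d`) and the `%Y.%m.%d` suffix used above.

```bash
#!/bin/bash
# Hypothetical helper: print <prefix>-%Y.%m.%d index names for the days
# <from> .. <to> before <base_date> (GNU date required).
index_names_between() {
    local prefix="$1" base="$2" from="$3" to="$4" n
    for (( n = from; n <= to; n++ )); do
        echo "${prefix}-$(date -d "${base} ${n} days ago" +%Y.%m.%d)"
    done
}
```

For example, `for idx in $(index_names_between es-nacos-server "$(date +%F)" 7 14); do curl -s -XDELETE "http://192.168.56.30:9200/${idx}"; done` removes the indices that are 7 to 14 days old. Deleting an index that does not exist just returns a 404.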

2. Build the Filebeat base image

Official download page: https://www.elastic.co/cn/downloads/past-releases/filebeat-7-9-0

2.1 Write the Dockerfile

[root@k8smaster01.host.com:/data/Dockerfile/filebeat]# vim Dockerfile

FROM debian:jessie
ENV FILEBEAT_VERSION=7.9.0 \
    FILEBEAT_SHA512=a069cc0906ff95d1c42118d501edc81d8af10d77124e5b38d209201d49e6649e4e2130a270885131abda214fee72c60f0d0174ef9ba92617ecc5b2554bc2fc35
COPY filebeat-7.9.0-linux-x86_64.tar.gz /opt/
# Verify the tarball, install the filebeat binary into /bin, then clean up.
# Nothing is installed with apt-get, so the update/autoremove/clean steps are
# dropped (jessie is end-of-life and its repositories are archived).
RUN set -x && \
    mv /opt/filebeat-7.9.0-linux-x86_64.tar.gz /opt/filebeat.tar.gz && \
    cd /opt && \
    echo "${FILEBEAT_SHA512}  filebeat.tar.gz" | sha512sum -c - && \
    tar xzvf filebeat.tar.gz && \
    cd filebeat-* && \
    cp filebeat /bin && \
    cd /opt && \
    rm -rf filebeat* /tmp/* /var/tmp/*
COPY docker-entrypoint.sh /
ENTRYPOINT ["/docker-entrypoint.sh"]
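The `sha512sum -c` step makes the build fail when the tarball does not match the pinned hash. The same check can be run on the host before `docker build`. Below is a sketch with a hypothetical `verify_sha512` helper; GNU coreutils is assumed for `--status`.

```bash
#!/bin/bash
# Hypothetical helper: succeed only if <file> matches the expected SHA-512.
# Uses the "<hash>  <file>" line format (two spaces) that sha512sum -c reads.
verify_sha512() {
    local file="$1" expected="$2"
    echo "${expected}  ${file}" | sha512sum -c --status -
}
```

Usage: `verify_sha512 filebeat-7.9.0-linux-x86_64.tar.gz "$FILEBEAT_SHA512"`, with FILEBEAT_SHA512 set to the value pinned in the Dockerfile.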

2.2 Write docker-entrypoint.sh

[root@k8smaster01.host.com:/data/Dockerfile/filebeat]# vim docker-entrypoint.sh

#!/bin/bash

ENV=${ENV:-"test"}
PROJ_NAME=${PROJ_NAME:-"no-define"}
MULTILINE=${MULTILINE:-"^\d{2}"}

cat > /etc/filebeat.yaml << EOF
filebeat.inputs:
- type: log
  fields_under_root: true
  fields:
    topic: logm-${PROJ_NAME}
  paths:
    - /logm/*.log
    - /logm/*/*.log
    - /logm/*/*/*.log
    - /logm/*/*/*/*.log
    - /logm/*/*/*/*/*.log
  scan_frequency: 120s
  max_bytes: 10485760
  multiline.pattern: '$MULTILINE'
  multiline.negate: true
  multiline.match: after
  multiline.max_lines: 100
- type: log
  fields_under_root: true
  fields:
    topic: logu-${PROJ_NAME}
  paths:
    - /logu/*.log
    - /logu/*/*.log
    - /logu/*/*/*.log
    - /logu/*/*/*/*.log
    - /logu/*/*/*/*/*.log
    - /logu/*/*/*/*/*/*.log
output.kafka:
  hosts: ["kafka0.lowan.com:9092", "kafka1.lowan.com:9092", "kafka2.lowan.com:9092"]
  topic: k8s-fb-$ENV-%{[topic]}
  version: 2.0.0
  required_acks: 0
  max_message_bytes: 10485760
EOF

set -xe

# If the user doesn't provide any command, run filebeat
if [[ "$1" == "" ]]; then
    exec filebeat -c /etc/filebeat.yaml
else
    # Otherwise allow the user to run arbitrary commands, such as bash
    exec "$@"
fi

# Make the script executable

[root@k8smaster01.host.com:/data/Dockerfile/filebeat]# chmod +x docker-entrypoint.sh
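Filebeat fills in `%{[topic]}` per event. With ENV=test and PROJ_NAME=p003-web-log, the logm input therefore publishes to k8s-fb-test-logm-p003-web-log, and that name has to match the Logstash `topics_pattern` in 3.3. Below is a sketch that derives the topic from the same variables and checks it against a pattern. The function names are hypothetical, and bash's ERE matching is assumed to behave like Kafka's Java regex for a simple pattern such as `k8s-fb-test-.*`. The match is anchored because, as far as we know, Kafka pattern subscriptions match the whole topic name.

```bash
#!/bin/bash
# Hypothetical helper: print the Kafka topic filebeat would use for one input,
# following output.kafka.topic = k8s-fb-$ENV-%{[topic]} with topic = <kind>-$PROJ_NAME.
fb_topic() {
    local env="${1:-test}" kind="$2" proj="${3:-no-define}"
    echo "k8s-fb-${env}-${kind}-${proj}"
}

# Succeed if a topic matches a topics_pattern-style regex over its whole name.
topic_matches() {
    local re="^(${2})\$"
    [[ "$1" =~ $re ]]
}
```

Once the image is built, the rendered configuration can also be checked in a throwaway container. This works because the entrypoint writes /etc/filebeat.yaml before running the given command: `docker run --rm -e PROJ_NAME=demo harbor.iot.com/base/filebeat:7.9.0 filebeat test config -c /etc/filebeat.yaml`.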

2.3 Build the Filebeat image

[root@k8smaster01.host.com:/data/Dockerfile/filebeat]# docker build -t harbor.iot.com/base/filebeat:7.9.0 .

2.4 Attach Filebeat to p003-web

2.4.1 Modify the p003-web Deployment

[root@k8smaster01.host.com:/data/yaml/p003-web]# vim dp.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: p003-web
  labels:
    app: p003-web
spec:
  replicas: 1
  selector:
    matchLabels:
      app: p003-web
  template:
    metadata:
      labels:
        app: p003-web
    spec:
      containers:
      - name: p003-web
        image: harbor.iot.com/p003/alpine_p003_web:V1.1.17
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 20300
          protocol: TCP
          name: tcp20300
        command:
        - sh
        - -c
        - "exec nginx -g 'daemon off;'"
        volumeMounts:
        - name: logm
          mountPath: "/opt/P003/log"
        - name: p003-web-config-volume
          mountPath: "/etc/nginx/conf.d"
        - name: p003-mqtt-config-volume
          mountPath: "/opt/P003/static-config"
        resources:
          limits:
            cpu: 1000m
            memory: 2000Mi
          requests:
            cpu: 200m
            memory: 350Mi
      - name: filebeat
        image: harbor.iot.com/base/filebeat:7.9.0
        imagePullPolicy: IfNotPresent
        env:
        - name: ENV
          value: test
        - name: PROJ_NAME
          value: p003-web-log
        volumeMounts:
        - mountPath: /logm
          name: logm
      volumes:
      - name: logm
        emptyDir: {}
      - name: p003-web-config-volume
        configMap:
          name: p003-web-nginx-conf
      - name: p003-mqtt-config-volume
        configMap:
          name: p003-web-mqtt-conf
      imagePullSecrets:
      - name: harbor
      terminationGracePeriodSeconds: 30
      securityContext:
        runAsUser: 0
      schedulerName: default-scheduler
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1        # how many extra Pods may be created at a time
      maxUnavailable: 0  # how many Pods may be unavailable during the rolling update
      # maxUnavailable: 0 keeps full capacity during the rollout (new Pods must be
      # ready before old ones are removed); percentages can also be used.
  revisionHistoryLimit: 5
  progressDeadlineSeconds: 600
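With this layout, the application writes to /opt/P003/log and the sidecar sees the same emptyDir at /logm. A file is shipped only if one of the logm globs from docker-entrypoint.sh (/logm/*.log down to /logm/*/*/*/*/*.log) matches it. Filebeat globs do not cross `/`, so directory depth matters. Below is a sketch of a hypothetical `logm_collected` check that takes a path relative to the log directory.

```bash
#!/bin/bash
# Hypothetical helper: given a path relative to the app's log dir
# (/opt/P003/log, mounted at /logm in the filebeat sidecar), succeed if the
# logm input's globs (/logm/*.log ... /logm/*/*/*/*/*.log) would match it.
logm_collected() {
    local rel="$1" slashes
    [[ "$rel" == *.log ]] || return 1
    # Each "/" is one directory level; the globs cover 0 to 4 levels.
    slashes=$(printf '%s' "$rel" | tr -cd '/')
    [ "${#slashes}" -le 4 ]
}
```

For example, `logm_collected nginx/access.log` succeeds, while a file five directories deep would not be collected.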

2.4.2 Run p003-web and Filebeat

[root@k8smaster01.host.com:/data/yaml/p003-web]# kubectl apply -f dp.yaml

deployment.apps/p003-web created

3. Deploy Logstash

3.1 Prepare the image

docker pull logstash:7.9.0

docker tag docker.io/library/logstash:7.9.0 harbor.iot.com/base/logstash:7.9.0

docker push harbor.iot.com/base/logstash:7.9.0

3.2 Create the namespace

[root@k8smaster01.host.com:/data/yaml/logstash]# vim namespace-es.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: es

3.3 Create the Logstash ConfigMap

[root@k8smaster01.host.com:/data/yaml/logstash]# vim configmap-logstash-conf.yaml

kind: ConfigMap
apiVersion: v1
metadata:
  name: logstash-conf
  namespace: es
data:
  logstash.conf: |
    input {
      kafka {
        bootstrap_servers => "kafka0.lowan.com:9092,kafka1.lowan.com:9092,kafka2.lowan.com:9092"
        client_id => "test"
        group_id => "k8s_test"
        auto_offset_reset => "latest"
        consumer_threads => 4
        decorate_events => true
        topics_pattern => "k8s-fb-test-.*"
      }
    }
    filter {
      json {
        source => "message"
      }
    }
    output {
      elasticsearch {
        hosts => ["192.168.13.102:9200"]
        # dd = day of month; DD would be day of year (e.g. 2020.10.289)
        index => "k8s-test-%{+YYYY.MM.dd}"
      }
    }
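Logstash's `%{+...}` date references use Joda-Time patterns, in which `dd` is the day of the month and `DD` is the day of the year. The sketch below reproduces both with GNU date (`%d` and `%j`); the function names are hypothetical. For 2020-10-15, the day-of-year form gives k8s-test-2020.10.289, which matches the odd index names in the 3.7 listing.

```bash
#!/bin/bash
# Hypothetical helpers: the index name a date produces with the day-of-month
# pattern (Joda dd, like date %d) and with day-of-year (Joda DD, like %j).
index_name_dd() { date -d "$2" +"$1-%Y.%m.%d"; }
index_name_DD() { date -d "$2" +"$1-%Y.%m.%j"; }
```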

3.4 Create the Logstash Service

[root@k8smaster01.host.com:/data/yaml/logstash]# vim svc-logstash.yaml

kind: Service
apiVersion: v1
metadata:
  labels:
    elastic-app: logstash
  name: logstash-service
  namespace: es
spec:
  ports:
  - port: 8080
    targetPort: 8080
  selector:
    elastic-app: logstash
  # type: NodePort   # not needed because access goes through an IngressRoute; enable it temporarily for testing

3.5 Create the Logstash Deployment

[root@k8smaster01.host.com:/data/yaml/logstash]# vim deployment-logstash.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    elastic-app: logstash
  name: logstash
  namespace: es
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      elastic-app: logstash
  template:
    metadata:
      labels:
        elastic-app: logstash
    spec:
      containers:
      - name: logstash
        image: harbor.iot.com/base/logstash:7.9.0
        volumeMounts:
        - mountPath: /usr/share/logstash/pipeline/logstash.conf
          subPath: logstash.conf
          name: logstash-conf
        ports:
        - containerPort: 8080
          protocol: TCP
        env:
        # 7.x setting xpack.monitoring.elasticsearch.hosts; the older *_URL form is not recognised
        - name: "XPACK_MONITORING_ELASTICSEARCH_HOSTS"
          value: "http://192.168.13.102:9200"
        securityContext:
          privileged: true
        resources:
          limits:
            cpu: 1000m
            memory: 4000Mi
          requests:
            cpu: 200m
            memory: 350Mi
      volumes:
      - name: logstash-conf
        configMap:
          name: logstash-conf
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule

3.6 Apply the manifests

[root@k8smaster01.host.com:/data/yaml/logstash]# kubectl apply -f namespace-es.yaml

[root@k8smaster01.host.com:/data/yaml/logstash]# kubectl apply -f svc-logstash.yaml

[root@k8smaster01.host.com:/data/yaml/logstash]# kubectl apply -f configmap-logstash-conf.yaml

[root@k8smaster01.host.com:/data/yaml/logstash]# kubectl apply -f deployment-logstash.yaml

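3.7 Check the indices

Note: the k8s-test-2020.10.287 to k8s-test-2020.10.291 indices in the listing below end in the day of the year (287 to 291, i.e. October 13 to 17, 2020). This is because the Logstash index pattern used `DD`, which is day-of-year; `%{+YYYY.MM.dd}` yields the day of the month instead.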

[root@k8smaster02.host.com:/opt/zookeeper/logs]# curl http://192.168.13.102:9200/_cat/indices?v

health status index uuid pri rep docs.count docs.deleted store.size pri.store.size

green open .kibana-event-log-7.9.0-000001 EqQ2A3LQTBypB-kCaa-toA 1 0 1 0 5.5kb 5.5kb

green open .apm-agent-configuration _ib6otnRSQGrG9DHhqcbmA 1 0 0 0 208b 208b

green open .monitoring-es-7-2020.10.17 sNsj888YQJi_rUet3WcXYQ 1 0 25850 39780 46mb 46mb

green open .monitoring-es-7-2020.10.16 _HVABUjLT8Cw25z8Jaicww 1 0 224746 32566 126mb 126mb

green open .kibana_1 Rys0b8voS-iZuowNirBd2Q 1 0 351 208 22.8mb 22.8mb

green open k8s-test-2020.10.289 E3BDeVdbR_2hLvx-z-stqA 5 0 53860699 0 10gb 10gb

green open k8s-test-2020.10.288 vHn9wY67Rk2GDO2bOSCJ0w 5 0 166688010 0 30.9gb 30.9gb

green open k8s-test-2020.10.287 K1g7iXDhQ4eqXvmtY9r2zA 5 0 474 0 410.6kb 410.6kb

green open .monitoring-es-7-2020.10.15 8xrnlUV9Qo6Xj491gFPqTQ 1 0 198803 267747 126mb 126mb

green open .apm-custom-link JJaYo9LMTO6gXlHIYizdmw 1 0 0 0 208b 208b

green open .monitoring-es-7-2020.10.14 SgzK0kuaTpa1O8h9fJFs0w 1 0 172732 206514 111.5mb 111.5mb

green open kibana_sample_data_ecommerce Cl-JqkSXT6-seICbCKNe_w 1 0 4675 0 4.8mb 4.8mb

green open .kibana_task_manager_1 r-x-Ev6ERImCTGYHm0bs-w 1 0 6 21984 2.1mb 2.1mb

green open .monitoring-es-7-2020.10.13 NwwQvg1cT6q2rblfz_oKlQ 1 0 143417 129339 80.6mb 80.6mb

green open k8s-test-2020.10.291 dpbrdYl-Qv-RzkEZh0H-pQ 5 0 20957304 0 6.7gb 6.7gb

green open .monitoring-es-7-2020.10.12 9RykgLDhRnGhvq-BAon-pA 1 0 55080 39310 23.8mb 23.8mb

green open k8s-test-2020.10.290 LavDmYSoQSaWuL5VTuOsSA 5 0 46987809 0 13.2gb 13.2gb

green open .monitoring-kibana-7-2020.10.12 00qjijh-QIaKWYs8d2N1bw 1 0 7868 0 1.3mb 1.3mb

green open .async-search q-_fN4U-SXuOStIP48Ytog 1 0 17 1678 236.6mb 236.6mb

green open .monitoring-kibana-7-2020.10.13 aDn677DrQYyV0KhF0KeZVQ 1 0 17278 0 2.7mb 2.7mb

green open .monitoring-kibana-7-2020.10.14 Kn32Cy6yTIm9FCMnfG57gg 1 0 17280 0 2.9mb 2.9mb

green open .monitoring-kibana-7-2020.10.15 p594NX1iScanKZWevtQjSg 1 0 17278 0 2.8mb 2.8mb

green open .monitoring-kibana-7-2020.10.16 qwuLiChATWyC4i1cMrSTHA 1 0 17278 0 2.9mb 2.9mb

green open .monitoring-kibana-7-2020.10.17 Q-cOVGdoToqWjeDQ1hq1Jw 1 0 1776 0 640.2kb 640.2kb
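To keep an eye on the log indices, the `_cat/indices?v` output can be filtered with awk. Below is a sketch of a hypothetical `k8s_index_summary` that prints each k8s-* index with its docs.count and store.size, followed by the total document count. It assumes the default column order shown above (index in column 3, docs.count in 7, store.size in 9). The cat API can also select columns itself via its `h` parameter, e.g. `_cat/indices/k8s-*?v&h=index,docs.count,store.size`.

```bash
#!/bin/bash
# Hypothetical helper: read `_cat/indices?v` output on stdin and print each
# k8s-* index with its docs.count and store.size, then the total doc count.
# Skips the header line; assumes index = $3, docs.count = $7, store.size = $9.
k8s_index_summary() {
    awk 'NR > 1 && $3 ~ /^k8s-/ { print $3, $7, $9; total += $7 }
         END { print "total_docs", total + 0 }'
}
```

Usage: `curl -s 'http://192.168.13.102:9200/_cat/indices?v' | k8s_index_summary`.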

4. Deploy Kibana

4.1 Prepare the image

docker pull kibana:7.9.0

docker tag docker.io/library/kibana:7.9.0 harbor.iot.com/base/kibana:7.9.0

docker push harbor.iot.com/base/kibana:7.9.0

4.2 Create the Kibana Service

[root@k8smaster01.host.com:/data/yaml/kibana]# vim svc-kibana.yaml

kind: Service
apiVersion: v1
metadata:
  labels:
    elastic-app: kibana
  name: kibana-service
  namespace: es
spec:
  ports:
  - protocol: TCP
    port: 5601
    targetPort: 5601
  selector:
    elastic-app: kibana

4.3 Create the Kibana ConfigMap

[root@k8smaster01.host.com:/data/yaml/kibana]# vim configmap-kibana.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: kibana-config
  namespace: es
data:
  kibana.yml: |
    server.name: kibana
    server.host: "0"
    elasticsearch.hosts: [ "http://es.lowan.com:9200" ]
    monitoring.ui.container.elasticsearch.enabled: true

4.4 Create the Kibana Deployment

[root@k8smaster01.host.com:/data/yaml/kibana]# vim deployment-kibana.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    elastic-app: kibana
  name: kibana
  namespace: es
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      elastic-app: kibana
  template:
    metadata:
      labels:
        elastic-app: kibana
    spec:
      containers:
      - name: kibana
        image: harbor.iot.com/base/kibana:7.9.0
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 5601
          protocol: TCP
        env:
        # The Kibana image maps ELASTICSEARCH_HOSTS to elasticsearch.hosts (same value as kibana.yml)
        - name: "ELASTICSEARCH_HOSTS"
          value: "http://es.lowan.com:9200"
        resources:
          limits:
            cpu: 2000m
            memory: 4000Mi
          requests:
            cpu: 200m
            memory: 350Mi
        volumeMounts:
        - name: kibana-config-v
          mountPath: /usr/share/kibana/config/kibana.yml
          subPath: kibana.yml
      volumes:
      - name: kibana-config-v
        configMap:
          name: kibana-config
          items:
          - key: kibana.yml
            path: kibana.yml
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  progressDeadlineSeconds: 600

4.5 Create the Kibana IngressRoute

[root@k8smaster01.host.com:/data/yaml/kibana]# vim ingressroute-kibana.yaml

apiVersion: traefik.containo.us/v1alpha1
kind: IngressRoute
metadata:
  labels:
    app: kibana
  name: kibana-ingress
  namespace: es
  annotations:
    traefik.ingress.kubernetes.io/router.entrypoints: web
    kubernetes.io/ingress.class: "traefik"
spec:
  entryPoints:
  - web
  routes:
  - match: Host(`kibana.lowan.com`) && PathPrefix(`/`)
    kind: Rule
    services:
    - name: kibana-service
      port: 5601

4.6 Configure DNS resolution

[root@lb03.host.com:/var/named]# vim lowan.com.zone

$ORIGIN lowan.com.
$TTL 600    ; 10 minutes
@           IN SOA  dns.lowan.com. dnsadmin.lowan.com. (
                    2020101011 ; serial
                    10800      ; refresh (3 hours)
                    900        ; retry (15 minutes)
                    604800     ; expire (1 week)
                    86400 )    ; minimum (1 day)
            NS      dns.lowan.com.
$TTL 60     ; 1 minute
dns         A       192.168.13.99
traefik     A       192.168.13.100
grafana     A       192.168.13.100
blackbox    A       192.168.13.100
prometheus  A       192.168.13.100
kubernetes  A       192.168.13.100
rancher     A       192.168.13.97
jenkins     A       192.168.13.100
mysql       A       192.168.13.100
p003web     A       192.168.13.100
kibana      A       192.168.13.100

Save and exit with :x. Increment the SOA serial whenever the zone changes.

[root@lb03.host.com:/var/named]# systemctl restart named

[root@lb03.host.com:/var/named]# systemctl status named

[root@lb03.host.com:/var/named]# dig -t A kibana.lowan.com @192.168.13.99 +short

192.168.13.100
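Besides dig, BIND's `named-checkzone lowan.com lowan.com.zone` validates the zone file before named is restarted. For a quick lookup of what the file itself says, below is a sketch of a hypothetical `zone_a_record` helper. It only handles records that name their owner explicitly, not lines that inherit the owner from the previous record.

```bash
#!/bin/bash
# Hypothetical helper: print the address of the A record for <name> in a
# simple zone file. Handles "<name> [ttl] [IN] A <addr>"; ";" comments are ignored.
zone_a_record() {
    local zone_file="$1" name="$2"
    awk -v n="$name" '
        { sub(/;.*/, "") }
        $1 == n {
            for (i = 2; i < NF; i++) if ($i == "A") { print $(i + 1); break }
        }
    ' "$zone_file"
}
```

Note that `zone_a_record lowan.com.zone es` prints nothing for the zone shown here, even though Kibana's configuration points at es.lowan.com. That name must resolve from the Kibana Pod, presumably to the Elasticsearch node at 192.168.13.102.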

4.7 Apply the manifests

[root@k8smaster01.host.com:/data/yaml/kibana]# kubectl apply -f svc-kibana.yaml

[root@k8smaster01.host.com:/data/yaml/kibana]# kubectl apply -f configmap-kibana.yaml

[root@k8smaster01.host.com:/data/yaml/kibana]# kubectl apply -f deployment-kibana.yaml

[root@k8smaster01.host.com:/data/yaml/kibana]# kubectl apply -f ingressroute-kibana.yaml

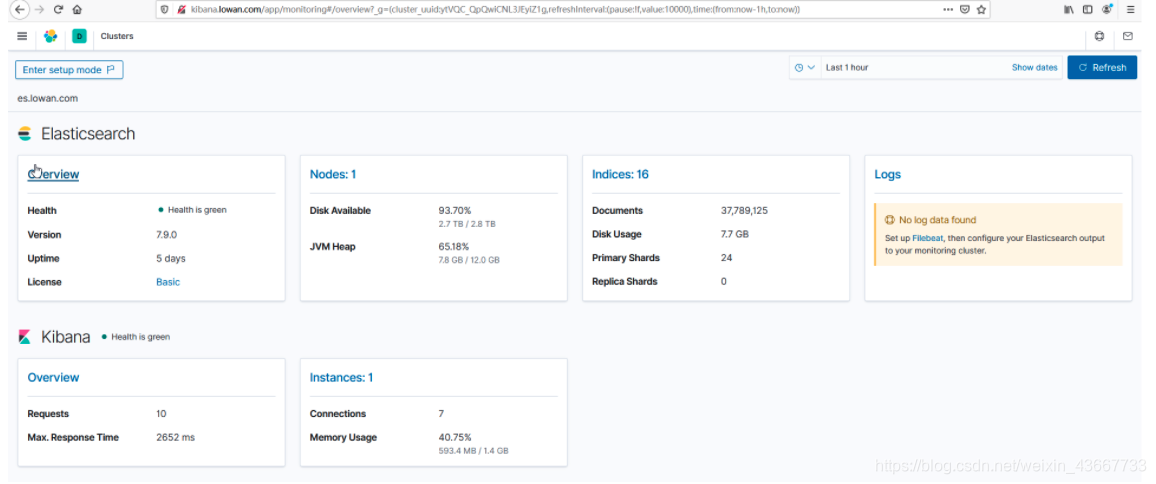

4.8 Verify access

Open http://kibana.lowan.com in a browser.

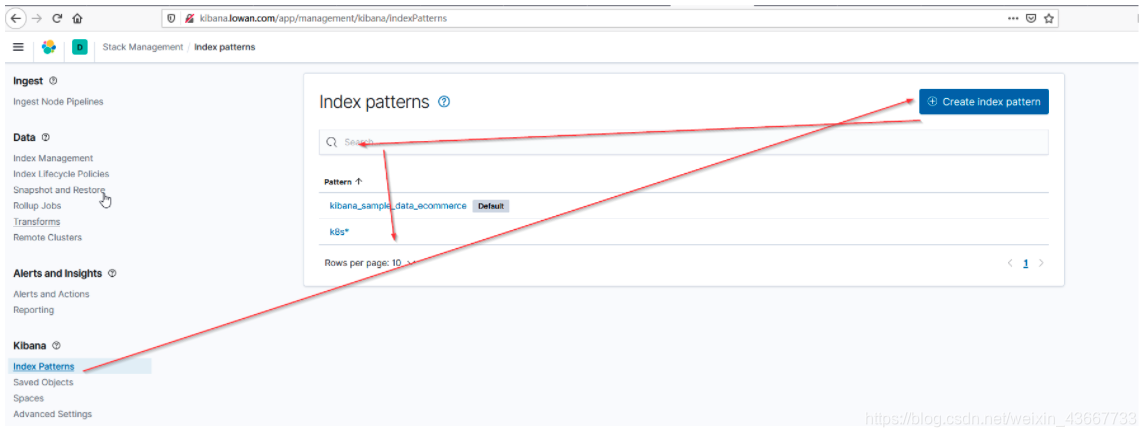

a. Navigation path: Management -> Stack Monitoring

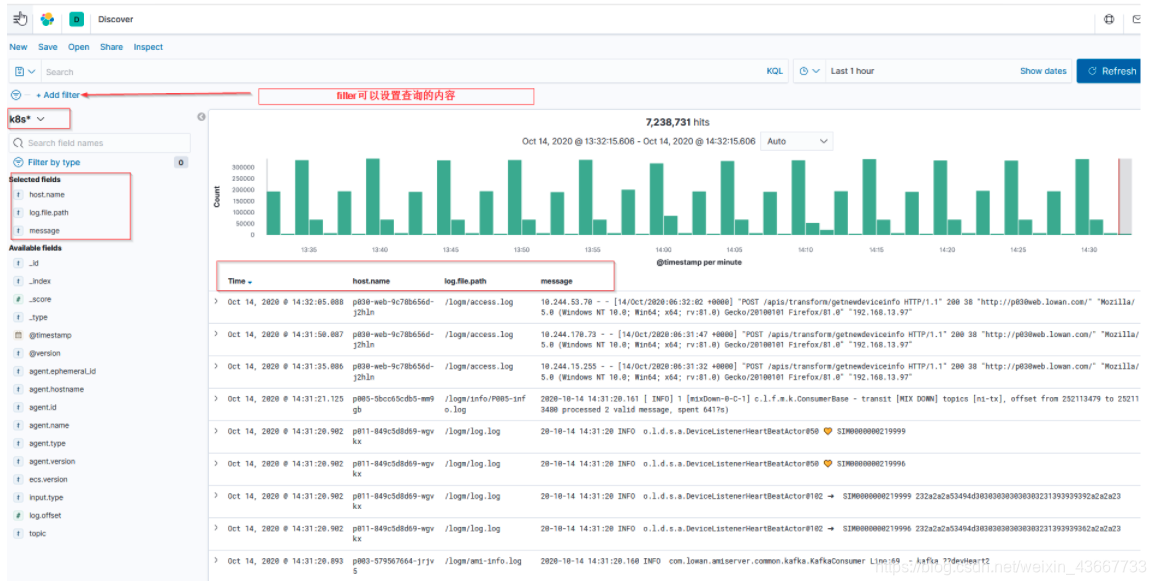

b. Navigation path: Management -> Stack Management -> Index Patterns

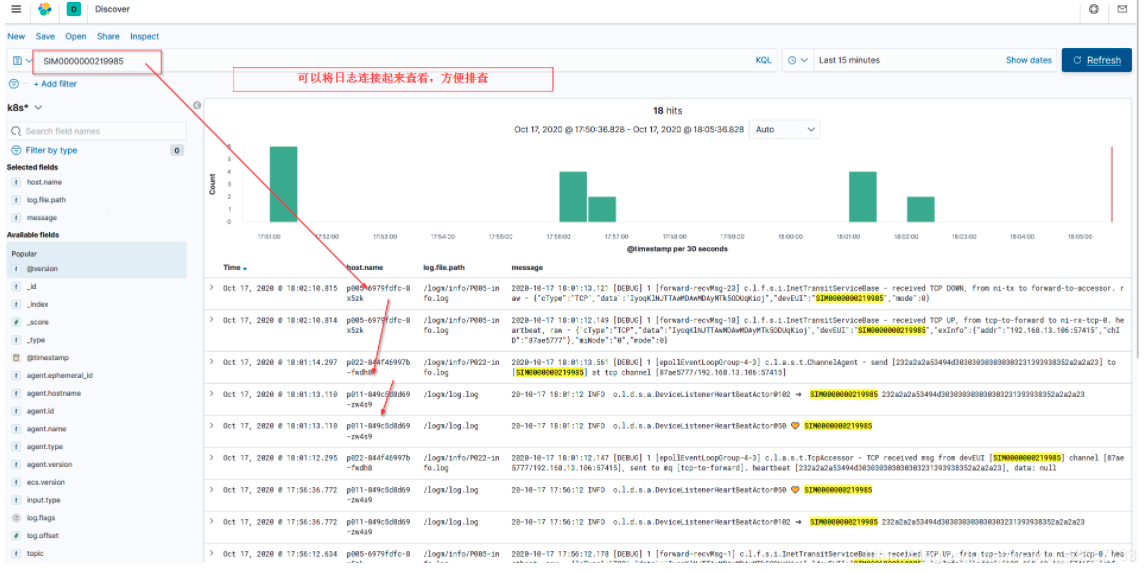

c. Navigation path: Kibana -> Discover -> select the k8s* index pattern. For easier reading, add the fields host.name, log.file.path and message.

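Related articles in this series (cluster):

01 Kubernetes binary deployment
02 Kubernetes auxiliary environment setup
03 K8S cluster network ACL rules
04 Ceph cluster deployment
05 Deploy the ZooKeeper and Kafka clusters
06 Deploy the logging system
07 Deploy InfluxDB-Telegraf
08 Deploy Jenkins
09 Deploy k3s and Helm-Rancher
10 Deploy Maven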


Author: 怎么没有鱼儿上钩呢

Link: http://www.javaheidong.com/blog/article/207750/8aa0b930666ef0448669/

Source: java黑洞网

Any form of reproduction must credit the source; infringements will be pursued.
